Turn your research insights into actionable recommendations

I could tell my report writing was steadily improving. People were more engaged when I used video clips to show what different users were doing or feeling. I was incorporating more infographics and annotations into my usability testing reports. My reports were clearer and more straightforward. Colleagues started to use my research because it had become more accessible to them.

At the end of one presentation, a colleague approached me and asked what I recommended based on the research. I was a bit puzzled; I hadn't expected anyone to ask me this kind of question. At that point in my career, I wasn't aware that I was expected to make recommendations based on my research insights. I could talk about next steps in terms of what other research we needed to conduct. I could also relay that something wasn't working in a prototype, but I had no idea what to suggest.

Over time, more and more colleagues asked for these recommendations. Finally, I realized that one of the key pieces missing from my reports was the “so what?” The prototype isn't working, so what do we do next? Because I didn't include suggestions, my colleagues had a difficult time connecting actions to my insights. Sure, the team could spot the obvious changes, but deciding on next steps was a struggle, especially for generative research.

Without these suggestions, my insights started to fall flat. My colleagues were excited about them and loved seeing the video clips, but they weren't acting on the findings. So I set out to experiment with how to write recommendations in a user research report.

How to write recommendations

For a while, I wasn't sure how to write recommendations, and even now I believe there is no one right way. When I first looked into this, I started with two main questions:

What do recommendations mean to stakeholders?

How prescriptive should recommendations be?

When people asked me for recommendations, I had no idea what they were looking for. I was nervous that I would step on people's toes and give the impression that I knew more than I did. I wasn't a designer, and I didn't want to make wacky design recommendations or impractical suggestions that would have developers rolling their eyes.

When in doubt, I dusted off my internal research cap and sat with stakeholders to understand what they meant by recommendations. I asked them for examples of what they expected and what made a suggestion “helpful” or “actionable.” I walked away with a list of “must-haves” for my recommendations. They had to be:

Flexible. Just because I made an initial recommendation did not mean it was the only path forward. Once I presented the recommendations, we could talk through other ideas and consider new information. There were a few times when I revised my recommendations based on conversations I had with colleagues.

Feasible. At first, I presented my recommendations without getting any prior feedback. Then my worst nightmare came true: the designer and developer sat back, arms crossed, and said, “A lot of this is impossible.” I quickly learned to review any recommendations I was uncertain about with them beforehand. Alternatively, I came up with several possible solutions for a single problem to help avoid this.

Prioritized (to the best of my ability). Since I can't always judge how much effort a recommendation will take, I use a chart of impact and reach to prioritize suggestions. Then, once I present this list, the team may reprioritize it based on their effort estimates (hey, flexibility!).

Detailed. This point helped a lot with my second question about how prescriptive my recommendations should be. Some of the best detail comes from photos, videos, or screenshots, and colleagues appreciated it when I linked recommendations to this media. They also asked me to include as much detail as possible to avoid vagueness, misinterpretation, and endless debate.

Think MVP. Think about the solution with the fewest changes instead of recommending complex changes to a feature or product. What are some minor changes that the team can make to improve the experience or product?

Justified. This part was the hardest for me. When my research findings didn't align with expectations or business goals, I had no idea what to say. Yet when results show that we are going in the wrong direction, my recommendations become even more critical. Instead of telling the team that the new product or feature sucks and we should stop working on it, I offer alternatives. I follow the concept of “no, but...” So: “No, this isn't working, but we found that users value X and Y, which could lead to increased retention” (or whatever metric we were looking at).

Let’s look at some examples

Although this list was beneficial in guiding my recommendations, I still wasn’t well-versed in how to write them. So, after some time, I created a formula for writing recommendations:

Observed problem/pain point/unmet need + consequence + potential solution

Evaluative research

Let’s imagine we are testing a check-out page, and we found that users were having a hard time filling out the shipping and billing forms, especially when there were two different addresses.

A non-specific and unhelpful recommendation might look like:

Users get frustrated when filling out the shipping and billing form.

The reasons this recommendation is not ideal are:

It provides no context or detail about the problem

There is no proposed solution

It sounds a bit judgmental (focus on the problem!)

It offers no clear next step

A more specific, actionable recommendation about the same problem might look like this:

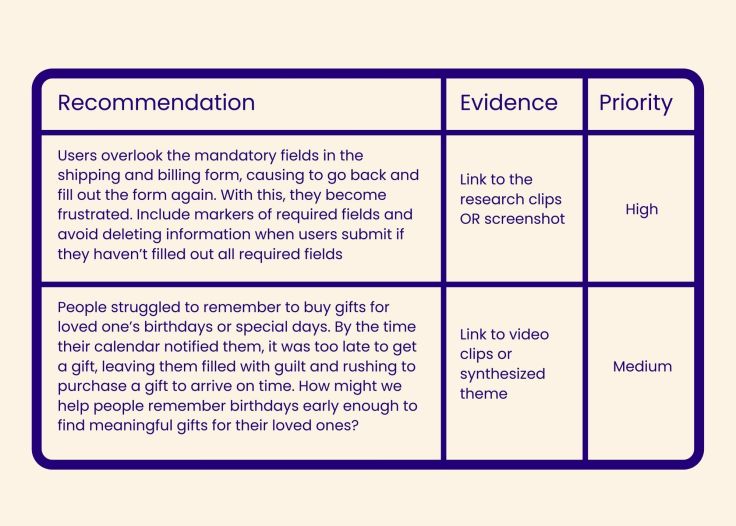

Users overlook the mandatory fields in the shipping and billing form, which forces them to go back and fill out the form again and leaves them frustrated. Mark required fields clearly, and keep the information users have already entered when they submit the form with required fields still missing.

Let’s take another example:

We tested an entirely new concept for our travel company that would let people pay to become “prime” travel members. No one in our user base found any value in having or paying for a membership. However, they did find value in several of its features, such as sharing trips with family members or splitting costs, but could not justify paying for them.

A suboptimal recommendation could look like this:

Users would not sign up or pay for a prime membership.

Again, there is a considerable lack of context and understanding here, as well as action. Instead, we could try something like:

Users do not find enough value in the prime membership to sign up or pay for it, so they do not see themselves using it. However, they did find value in two features: sharing trips with family members and splitting trip costs. Focusing on these features instead could bring more people to our platform and increase retention.

Generative research

Generative research can be a bit trickier because there isn't always an inherent problem to solve. For example, you might not be able to point to a usability issue, so you have to look more broadly at pain points or unmet needs.

For example, our generative research found that people often forget to buy gifts for loved ones, which leaves them feeling guilty and rushing at the last minute to find something meaningful, fast.

This finding is extremely broad and could go in so many directions. With suggestions, we don’t necessarily want to lead our teams down only one path (flexibility!), but we also don’t want to leave the recommendation too vague (detailed). I use How Might We statements to help me build generative research recommendations.

Just reporting the above wouldn't be enough for a recommendation, so let's put it in a more actionable format:

People struggled to remember to buy gifts for loved ones' birthdays or other special days. By the time their calendar notified them, it was too late to get a gift, leaving them feeling guilty and rushing to buy a meaningful gift that would arrive on time. How might we help people remember birthdays early enough to find meaningful gifts for their loved ones?

A great follow-up to generative research recommendations can be running an ideation workshop!

How to format recommendations in your report

I always end with recommendations because people leave a presentation with their minds buzzing and next steps top of mind (hopefully!). My favorite way to format suggestions is in a chart, which lets me link each recommendation back to its insight and priority. My recommendations look like this:

Overall, play around with the recommendations you give your teams. The best thing you can do is ask what they expect and then ask for feedback. By tailoring your recommendations to your colleagues' needs and iterating on them, you will help them make better decisions based on your research insights!

Written by Nikki Anderson, User Research Lead & Instructor. Nikki is a User Research Lead and Instructor with over eight years of experience. She has worked at companies of all sizes, ranging from a tiny start-up called ALICE to the large corporation Zalando, and also as a freelancer. During this time, she has led a diverse range of end-to-end research projects across the world, specializing in generative user research. Nikki also owns her own company, User Research Academy, a community and education platform designed to help people get into the field of user research or learn more about how user research impacts their current role. User Research Academy hosts online classes and content, as well as personalized mentorship opportunities with Nikki. She is extremely passionate about teaching and supporting others throughout their journey in user research. To spread the word about research and help others transition and grow in the field, she writes for dscout and Dovetail. Outside of the world of user research, you can find Nikki (happily) surrounded by animals, including her dog and two cats, reading on her Kindle, playing old-school video games like Pokemon and World of Warcraft, and writing fiction novels.
